Another blog post inspired by StackOverflow question. This time it's all about LINQ, performance and a tiny little detail, that really matters. The question itself is about yield keyword in VB.NET, but there is another, much more interesting part I'd like to examine. The algorithm quoted in the question is the key.

The idea is simple. How to partition a collection into parts with given number of elements in ever part? Algorithm presented in the question is as easy as the questions seems to be:

public IEnumerable<IEnumerable<T>> Partition<T>(IEnumerable<T> source, int size)

{

if (source == null)

throw new ArgumentNullException("list");

if (size < 1)

throw new ArgumentOutOfRangeException("size");

int index = 1;

IEnumerable<T> partition = source.Take(size).AsEnumerable();

while (partition.Any())

{

yield return partition;

partition = source.Skip(index++ * size).Take(size).AsEnumerable();

}

}public IEnumerable<IEnumerable<T>> Partition<T>(IEnumerable<T> source, int size)

{

var partition = new List<T>(size);

var counter = 0;

using (var enumerator = source.GetEnumerator())

{

while (enumerator.MoveNext())

{

partition.Add(enumerator.Current);

counter++;

if (counter % size == 0)

{

yield return partition.ToList();

partition.Clear();

counter = 0;

}

}

if (counter != 0)

yield return partition;

}

}- because it does no use Skip/Take methods

- because it has O(n) complexity, when the other one is O(n*log(n))

- because it iterate over entire collection only once, and the other one does it multiple times

var enumSource = Enumerable.Range(0, size);

var arraySource = enumSource.ToArray();

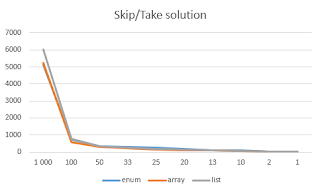

var listSource = arraySource.ToList();Y-axis shows execution time and X-axis shows number of partitions that are being generated. The difference is huge, isn't it? It takes about 6 second to generate 1000 partitions using Skip/Take algorithm and about 40ms to do that with the other one!

I'm writing this post to highlight that if LINQ seems to be really great solution for nearly every collection-related problem and you can get working solution really easily using it, it's not always really a good and fast solution.

No comments:

Post a Comment